I’m quite a bit late to setting my goals for the year. Yikes, March arrived so quickly. I wanted to take some time to put on paper (digital paper) what technologies I’m wanting to explore this year. Although I already shared some habits I’m working on, I didn’t really cover anything I want to accomplish in software. So for this post, I will be sharing a comprehensive list of every technology that I’m wanting to learn this year. I don’t believe that I’ll be able to accomplish every item on the list, because it may be too much. But I would rather be hopeful.

I’m going to break each technology down into categories that I think make the most sense and share each technology within them. I will also share my motivation for learning them and maybe some ways I’ll go about using them in projects. If you want to follow my learning on any of this, I keep most of my repos public on my GitHub. Lastly, these aren’t in any order, other than the categories I thought to group them in. Languages felt like a natural place to start, followed by web technologies, and ending with tools and miscellaneous items.

🤬 Language Technology

The first type of technology that I want to add to my repertoire is languages. Since I started programming, I’ve had the opportunity to use a lot of great languages, spending most of my time with: JavaScript, PHP, Elixir, Elm, C#, and other web languages (HTML, CSS, etc). Because I have spent a lot of time in the front-end in the last two years, I would like to focus my attention on more application or back-end technologies.

🧪 Elixir

This one is a bit of an oddball. I actually started learning Elixir back in 2020 and am using it in my full-time position at InfluxData/InfluxDB. In this time it has quickly become one of my favorite languages to use. If you aren’t already familiar, Elixir is a language built on top of the Erlang language and its VM, BEAM. Elixir is a functional programming language, which helps me write more reliable code because data is immutable and the language nudges you toward functions without hidden side effects. I’ve also had the opportunity to build some apps that were able to process a large amount of data concurrently because of Elixir’s lightweight processes. I really can’t say enough about how awesome of a language it is. On top of that, it just has a nice syntax.

I’m putting it on the list because I still feel like I have a lot to learn in terms of architecting an application and using the common libraries for the language. This year I would like to spend some more time with the Phoenix framework and build some applications using Phoenix’s LiveView. I think LiveView has a good shot of replacing JavaScript in some applications, so I would love to get my hands on it.

⚙️ Rust

I first heard about Rust from a blog post by Discord. I honestly don’t know that much about Rust, considering how much I’ve heard about it. The ecosystem and dev tooling appear to be excellent. But mostly, I have heard that the compiler is extremely helpful and friendly. I’ve become accustomed to the errors provided by the Elm compiler; getting that on the backend sounds great. It’s not a functional language, but it does promise reliability through a strict type system and memory management.

I’ll likely try Rust on a web project first. It is supposed to have good WASM support, so I’ll definitely try a project with that. After that, I’d like to give it a go with a hardware project. In college, I studied Electrical & Computer Engineering, and I’ve had an itch to apply some of that knowledge to something physical. At this point, I think I’ll create a device for performing music. I may have a go at writing Rust for Arduino. Honestly, a better language that works well with lower-level and hardware technology would be nice.

ƛ Haskell

Haskell is a bit of a stretch goal. But, since I love Elm so much, it seems natural to learn the language the compiler is written in. Haskell is a statically-typed, functional language. No other language gives me the confidence in deployment like Elm does. And I’m hoping Haskell will give me the same confidence because it also makes you handle all side-effects.

I would like to try a Haskell web project, which will most likely be a game. I anticipate it working extremely well for an event-driven game. Elm will almost certainly be the front-end. I think its animation support is incredible. So we’ll see how this one goes. It’s a wishlist thing to learn, so fingers crossed, I’ll be able to learn it. 🤞

🌏 Web Technology

This next category has two web technologies that I’ve dipped my toes in, but haven’t had the opportunity to use in a real application. I work as a software engineer, and have been deeply involved in web development for more than a decade. I’m leaving WASM off this list of technology, because I don’t plan on investing a lot of time into that, specifically. However, I think it’s likely that I’ll use it while working with Phoenix, Haskell, and maybe Rust.

🔌 Websockets

Websockets are the future. Or so I’ve been told 😜. Websockets, if you’re not already familiar, break out of the HTTP request/response lifecycle by allowing two-way communication over a single, long-lived connection. With websockets, messages can be sent from the server to the client at any time and handled in a callback. I’ve had the opportunity to use them in some experiments. But I’ve never used them in a production application.

I’m hoping to get some experience using Websockets this year. I’ll most likely be using Phoenix Sockets in Elixir, since that’s where I’ll be spending the bulk of my time. I think an interesting app to develop would be something with a shopping cart, so that I can send pricing updates to the clients. Alternatively, I might look into a chat application. I do think they would be a valuable tool to get some experience with, so if you have any ideas, please let me know!

📱 Progressive Web Apps (PWAs)

Progressive Web Apps (PWAs) are a really cool technology. I used them for the first time in 2021 after hearing a talk by lemon at a conference. Until then, I hadn’t realized how far browser support had come. My coworker and I went back to our hotel room and immediately had a go at converting an existing website to a PWA. It proved to be fairly easy to get past the initial steps. We quickly made the web app installable and set up some assets to be cached locally.

This year, I’m hoping to develop an app into a PWA with more extensive features. The primary feature I want to get experience with is queuing updates to be sent to the server while offline. I haven’t done a lot of state management with IndexedDB, but I’m going to want to figure that out quickly.

At one point, my personal website was a PWA and I’m going to try to get that back to being one. I have another blog where I talk about tacos, and it’s a PWA written in Elm. I would like to update some features on that blog to work better offline (like comments). If I do get the opportunity to work on a game, I might see about getting it published on the Microsoft Store and/or Google Play.

🛠 Tools & Misc Technology

This is a bit of a bucket of the other things I want to learn. I will probably learn both of these while working on another skill. And with any luck, I’ll be able to use some of them as part of my job.

💄 Tailwind CSS

Back in the day, I just had CSS. After a while, I finally got Sass, which felt like a miracle. I come from the old days of floating elements and praying. Honestly, although I’ve become familiar with the new features in CSS as they appear, I haven’t picked up any new CSS tooling since Sass. I have tried Bootstrap a few times throughout the years, but it never really stuck. It seems like Tailwind has been generating a lot of buzz and I think their website is pretty. And having a pretty website can get me to do just about anything.

Tailwind CSS will likely find its way into one of my projects while learning another language. I think if I make a fun app or static website to learn Rust or Phoenix LiveView, I could pop it in. I’m somewhat hopeful that I’ll fall for it with all of my heart. But even if not, I might just get some inspiration in design.

⏱ InfluxDB + Telegraf

This probably deserves to be a bit higher on this list. My employer, InfluxData, develops the leading time-series database. A time-series database (TSDB) is a database purpose-built for timestamped data. We have a cool page about it here, if you want to learn more. I work on the E-Commerce team, so I don’t spend time with InfluxDB for work. However, I have a project idea involving my thermostat that will require a lot of timestamped data. While I probably could get by with a SQL or JSON db, I think a TSDB serves analytics-style data really well.

InfluxData also develops the open source project Telegraf. Telegraf works as an ingest agent for a TSDB. It can even be used with TSDBs other than InfluxDB. I’ve actually had the opportunity to work with it once, and I found the experience to be really easy. I was able to authenticate against an OAuth API, refresh the token, and pull data, all with a single config file. It’s pretty sick!

🎓 Learning Technology Conclusion

Wish me luck! Again, I don’t believe I’ll be able to learn all of these, but I’m hoping to at least learn more about each of them. I imagine that new technologies and tools will present themselves as I continue learning. If something useful presents itself, I’ll work on that, of course.

Thanks so much for reading! Again, you can follow my learning over on my GitHub and sometimes on my twitter, @abshierjoel!

– Joel Abshier

This week I received a code review from a teammate asking me to add a @spec to a start_link function I wrote for a GenServer. My start_link function takes a keyword list, tries to get the values :first_name and :last_name with defaults, and then calls GenServer.start_link/3 with __MODULE__ as the module, those values as the initial state, and the keyword list as the options. The GenServer looks like this:

    defmodule JoelServer do
      use GenServer

      def start_link(opts \\ []) do
        # Pull our own settings out of the keyword list, with defaults.
        first_name = Keyword.get(opts, :first_name, "Joel")
        last_name = Keyword.get(opts, :last_name, "Abshier")

        initial_state = %{
          first_name: first_name,
          last_name: last_name
        }

        GenServer.start_link(__MODULE__, initial_state, opts)
      end

      @impl true
      def init(state) do
        {:ok, state}
      end
    end

What I would like to do is add a @spec to describe how to use this function. Luckily, in Elixir we have Dialyzer to make this easy. Dialyzer is a static analysis tool for Erlang/Elixir, and the tooling around it can suggest @spec annotations for a function. Let’s see what it thinks for this:

    @spec start_link(keyword) :: :ignore | {:error, any} | {:ok, pid}
    def start_link(opts \\ []) do
      ...
    end

While that’s correct, a more specific @spec is valuable to us. Let’s look at how we can do that.

📨 Arguments / Input Type

Let’s take a look at the keyword list we’re passing in. It technically doesn’t matter to us which options are passed in besides :first_name and :last_name. However, if the spec defines those specific keywords, we can catch issues and typos while writing the code. Let’s define a private type that lists our keywords. First, add the @typep definition and then update the @spec accordingly.

    @typep start_opt :: {:first_name, String.t()} | {:last_name, String.t()}

    @spec start_link(opts :: [start_opt]) :: :ignore | {:error, any} | {:ok, pid}
    def start_link(opts \\ []) do
      ...
    end

Now if you try to call JoelServer.start_link(title: "Mr.") you’ll get a Dialyxir warning saying something like this:

    ([{title, <<77,114,46>>}]) breaks the contract (opts::[start_opt()])

That’s pretty cool! Now the next time someone on my team has to use this GenServer, they’ll be told exactly how to use it while calling it. But what about the return type?

📬 Output / Return Type

Now for the reason you’re here. A co-worker pointed me toward a type in the GenServer docs: on_start/0. We can use it to replace the hand-written list of return types, :ignore | {:error, any} | {:ok, pid}. This lets us define our start_link with the same return type as Elixir’s own GenServer.start_link.

    @spec start_link(opts :: [start_opt]) :: GenServer.on_start()

I love this, because we no longer have to manage our own list of return types for each GenServer we define. If you’re working with a Supervisor, you are also given Supervisor.on_start/0. If you want to allow any options allowed by a GenServer or Supervisor, you’ll also find options/0, option/0, init_option/0, and so on.
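Here’s a minimal sketch of how those built-in types might fit together. The module names GreeterServer and GreeterSupervisor are made up for illustration, and I’m assuming you want to accept one custom key alongside the standard GenServer options:

```elixir
defmodule GreeterServer do
  use GenServer

  # Hypothetical option type: one key of our own plus anything GenServer accepts.
  @type start_opt :: {:first_name, String.t()} | GenServer.option()

  @spec start_link([start_opt]) :: GenServer.on_start()
  def start_link(opts \\ []) do
    # Separate our own key from the options GenServer understands.
    {first_name, genserver_opts} = Keyword.pop(opts, :first_name, "Joel")
    GenServer.start_link(__MODULE__, %{first_name: first_name}, genserver_opts)
  end

  @impl true
  def init(state), do: {:ok, state}
end

defmodule GreeterSupervisor do
  use Supervisor

  @spec start_link([Supervisor.option()]) :: Supervisor.on_start()
  def start_link(opts \\ []) do
    Supervisor.start_link(__MODULE__, :ok, opts)
  end

  @impl true
  def init(:ok) do
    # The child is registered under its module name so it is easy to find.
    children = [{GreeterServer, first_name: "Ada", name: GreeterServer}]
    Supervisor.init(children, strategy: :one_for_one)
  end
end
```

Calling GreeterSupervisor.start_link() should start both processes, and Dialyzer can then check callers against the same types the standard library uses.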

🐰 That’s all the GenServer we have today, folks

Anyhow, this might be pretty basic, if you’re familiar with Elixir. But I thought it was exciting to be told about these and wanted to share it. A lot of Elixir packages, especially the core library, have excellent hexdocs, so I’m going to make a habit of paying closer attention to the @specs on them for when I can take advantage of types like this.

I’ll probably be writing more short posts like this. It’s a bit basic, but I’ll just make a Today I Learned category here on the blog, so you can check those out.

If you have any short tips like this I’d love to hear about them. I’m new to the language, so I’d love to learn! Thanks for reading! 🧪

– Joel Abshier

“Flow allows developers to express computations on collections, similar to the Enum and Stream modules, although computations will be executed in parallel using multiple GenStages” [Flow HexDocs]. This allows you to express common transformations (like filter/map/reduce) in nearly the same way as you would with the Enum module. However, because Flow is parallelized, it can allow you to process much larger data sets with better resource utilization, while not having to write case-specific, complex OTP code. Flow can also work with both bounded and unbounded data sets, allowing you to collect data and pipe it through a Flow pipeline.

🥚 An Anecdotal Experience with Flow

Last summer, about 3 months into learning Elixir, I found myself in the middle of a project where I needed to collect product data from 5 different tables in a MSSQL Database and build a NoSQL database of products. I needed to process over 400,000 products daily. In addition to flattening the data, business rules for pricing, shipping restrictions, swatch options, etc. needed to be applied. We then shipped the data to our search provider, Algolia (amazing product btw). So let me use this story to make a case for Flow.

A co-worker of mine took an initial attempt at this project using NodeJS, but after getting about halfway through, we were already certain that it would not be performant enough. At this point we decided to develop the data processing pipeline in Elixir. Because we were both new to the language, we used Elixir’s Enum.map/2 and Enum.reduce/3 liberally, without any application of OTP. Surprisingly, we found that even with our extremely basic setup we were able to cut the time of the job down from over three hours to about 15 minutes.

Flow to the rescue!

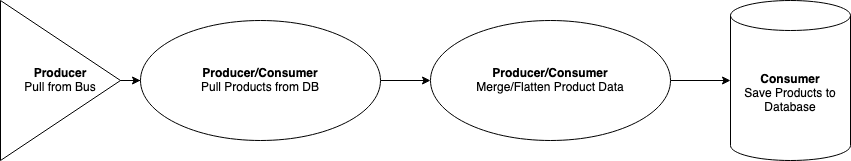

However, we still weren’t completely satisfied with the performance of the tool, and we wanted to apply OTP to make the processing of our products concurrent. After reading Concurrent Data Processing with Elixir we decided to use Elixir’s Flow package combined with a series of GenStages. Our GenStages were fairly simple, consisting of a producer responsible for pulling product IDs from a service bus, a producer/consumer responsible for collecting the product data from the database tables, another producer/consumer responsible for applying business rules and flattening the product data, and a consumer responsible for saving the data to the NoSQL database.

We do most of the data transformations in the second producer/consumer. This is where we applied our business rules for pricing, shipping, etc. and flattened our data. Underneath this producer/consumer we used Flow to add more concurrency to the processing. After massaging some of the parameters for how many concurrent processes Flow will use, we were able to process all of our product data in about 30 seconds, down from over 3 hours with NodeJS (which isn’t really a fair comparison, but aren’t big numbers fun).

🐣 Your First Flow Pipeline

Let’s take a look at the power that Flow can provide you without much overhead. In this example, we’re going to be looking at a dataset of information on every DC Comic Book release. You can download it for yourself over here to follow along. Let’s create an application to go through the dataset and get the list of comics written by each specific author. I’m going to treat comics with multiple authors as a unique author, for simplicity’s sake. Start by creating a new mix project:

    mix new comic_flow --module ComicFlow

Let’s navigate into the project’s root directory and run iex:

    cd comic_flow
    iex -S mix

Leave that for now and let’s open up lib/comic_flow.ex. In here, you can remove all of the code within the module and replace it with a new function called get_writers.

    defmodule ComicFlow do
      def get_writers do
        # TODO
      end
    end

The file we’re going to read is in CSV format, and that’s going to need decoding. Rather than try to figure that out, let’s add the CSV package from hex.pm to the deps in our mix.exs file.

    {:csv, "~> 2.4"}

After running mix deps.get to fetch it, back in get_writers we can read the data as a stream:

    File.stream!("dc_comics.csv")

And then we can call CSV.decode!/2:

    File.stream!("dc_comics.csv")
    |> CSV.decode!()

This next step is a little bit nasty, but it’ll work for the sake of example. The 2nd column of our CSV is the name of the comic and the 7th is the writer. We’re going to reduce over the list of comics and create a map of authors with their list of comics. This gives us a final get_writers function that looks like this:

    def get_writers do
      File.stream!("dc_comics.csv")
      |> CSV.decode!()
      |> Enum.reduce(
        %{},
        # Column 2 is the comic name, column 7 is the writer.
        fn [_cat, name, _link, _pencilers, _artists, _inkers, writer | _tail], acc ->
          Map.update(acc, writer, [name], &[name | &1])
        end
      )
    end

Let’s test

Perfect! Let’s go back to our iex terminal and run this code. I’m going to time it using Erlang’s :timer.tc function, following this method. If you want to learn it, that’ll take you about 2 minutes to read. I got an average time of ~171.25 seconds, with a minimum of 65 seconds and a max of 244.
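If you’d like to collect the same kind of numbers, here’s a rough sketch of a timing helper built on :timer.tc/1. The module name TimingSketch and the measure function are my own invention for this example, not something from a library:

```elixir
defmodule TimingSketch do
  # Runs `fun` `runs` times and reports wall-clock time in seconds.
  def measure(fun, runs \\ 3) when is_function(fun, 0) and runs > 0 do
    seconds =
      for _ <- 1..runs do
        # :timer.tc/1 returns {elapsed_microseconds, result_of_fun}.
        {microseconds, _result} = :timer.tc(fun)
        microseconds / 1_000_000
      end

    %{
      min: Enum.min(seconds),
      max: Enum.max(seconds),
      average: Enum.sum(seconds) / runs
    }
  end
end

# Example with a stand-in workload; swap in &ComicFlow.get_writers/0 in iex.
TimingSketch.measure(fn -> Enum.sum(1..1_000_000) end)
|> IO.inspect(label: "seconds")
```

Averaging a few runs smooths out noise from disk caching and whatever else the machine is doing at the time.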

Ouch. I’m not happy with that time, especially if the dataset gets larger. And if DC keeps releasing comics every year, it’s just going to get progressively worse. Enum.reduce works through the stream one row at a time in a single process. While this happens, not many system resources are being used, as most of our cores sit idle waiting for the next row to be read and decoded. But what if there was a way to spread the reduce over several processes while the stream is still being read in? That’s where Flow comes in. Let’s start by converting our stream into a Flow.

To Flow we Go

    def get_writers do
      File.stream!("dc_comics.csv")
      |> CSV.decode!()
      |> Flow.from_enumerable()
      |> Flow.partition(
        key: fn [_cat, _name, _link, _pencilers, _artists, _inkers, writer | _tail] ->
          writer
        end
      )
    end

Flow.from_enumerable/1 does the work of converting our data into a Flow, using the collection as the producer for the pipeline. There are options to configure the parallelization of the pipeline, but for now we can leave it without arguments. The next step is the Flow.partition/2 function. Partition is probably the trickiest function to use in Flow. It routes each event to a stage based on a hash of the event’s key. For simple shapes there are shortcuts, such as key: {:elem, 0} to use the first element of a tuple. In our case, we want to specify the key ourselves, so that every row for the same writer lands in the same partition. I like to think of this as “creating a path to the key.” In our example, the data is just a list, so we’ll destructure writer and return it as the key.
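To make the routing idea concrete, here’s a small standard-library sketch. It is not Flow’s actual code: I’m assuming :erlang.phash2/2 as a stand-in hash, and PartitionSketch plus the sample rows are made up for illustration:

```elixir
defmodule PartitionSketch do
  # Picks a partition index (0..partitions - 1) for a row by hashing its key,
  # here the 7th column, which holds the writer.
  def partition_for(row, partitions) do
    writer = Enum.at(row, 6)
    :erlang.phash2(writer, partitions)
  end
end

# Placeholder rows shaped like the CSV: name is column 2, writer is column 7.
rows = [
  ["cat", "Comic A", "link", "p", "a", "i", "Writer One"],
  ["cat", "Comic B", "link", "p", "a", "i", "Writer Two"],
  ["cat", "Comic C", "link", "p", "a", "i", "Writer One"]
]

# Rows that share a writer always end up under the same partition index.
rows
|> Enum.group_by(&PartitionSketch.partition_for(&1, 4), fn [_cat, name | _] -> name end)
|> IO.inspect(label: "names by partition")
```

Because the hash depends only on the writer, no two partitions ever hold rows for the same writer, which is what lets each one reduce independently.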

Enum.reduce -> Flow.reduce

Finally, we’ll convert our Enum.reduce into Flow.reduce. The functions are largely the same, with the primary exception being that Flow’s reduce takes a function that returns the initial accumulator, whereas Enum.reduce takes the initial accumulator value itself.

    def get_writers do
      File.stream!("dc_comics.csv")
      |> CSV.decode!()
      |> Flow.from_enumerable()
      |> Flow.partition(
        key: fn [_cat, _name, _link, _pencilers, _artists, _inkers, writer | _tail] ->
          writer
        end
      )
      |> Flow.reduce(
        # Each partition builds its own accumulator from this function.
        fn -> %{} end,
        fn [_cat, name, _link, _pencilers, _artists, _inkers, writer | _tail], acc ->
          Map.update(acc, writer, [name], &[name | &1])
        end
      )
      |> Enum.to_list()
    end

And lastly you’ll notice that we called Enum.to_list(). Calling this function will execute the Flow in parallel and return the final result. You can also use Flow.run(), but it will return an atom indicating success/failure, rather than the data from the flow.

After changing to Flow, the time to run through all of DC comics is now averaging 2.902 seconds. That’s about 1.7% of our initial time! Wow! That’s pretty cool.
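If you’re curious why partitioning plus a per-partition accumulator helps, here’s a hand-rolled sketch of the same idea using only the standard library. It is not how Flow is implemented (Flow streams events through GenStages, while this loads the rows up front), and PartitionedReduceSketch and the sample rows are made up for the example:

```elixir
defmodule PartitionedReduceSketch do
  # Groups comic names by writer, reducing each partition in its own process.
  def writers(rows, partitions \\ System.schedulers_online()) do
    rows
    # Route every row for the same writer to the same partition.
    |> Enum.group_by(fn row -> :erlang.phash2(Enum.at(row, 6), partitions) end)
    |> Map.values()
    # Each partition starts from its own fresh accumulator, which is why
    # Flow.reduce/3 asks for a function rather than a value.
    |> Task.async_stream(fn partition_rows ->
      Enum.reduce(partition_rows, %{}, fn [_cat, name, _link, _p, _a, _i, writer | _], acc ->
        Map.update(acc, writer, [name], &[name | &1])
      end)
    end)
    # A writer never spans two partitions, so merging is a plain union.
    |> Enum.reduce(%{}, fn {:ok, partial}, acc -> Map.merge(acc, partial) end)
  end
end

# Placeholder rows shaped like the CSV: name is column 2, writer is column 7.
rows = [
  ["cat", "Comic A", "link", "p", "a", "i", "Writer One"],
  ["cat", "Comic B", "link", "p", "a", "i", "Writer Two"],
  ["cat", "Comic C", "link", "p", "a", "i", "Writer One"]
]

result = PartitionedReduceSketch.writers(rows, 4)
IO.inspect(result, label: "comics by writer")
```

Flow gives you this shape without the bookkeeping, and it keeps memory use down by processing rows as they arrive instead of grouping them all first.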

🐥 Unleash the Flow

On one hand, there is a lot more to learn with Flow, and I’m sure you’ll run into some odd situations along the way where you’ll have to duke it out with Flow.partition. On the other hand, you’re now mostly equipped to start implementing reliable, concurrent code with Flow. And the best part is, you didn’t have to wrap your head around the systems required for this concurrency. You are simply able to use functions that are nearly identical to the functions you already use on collections daily.

I’ve personally had a ton of fun using Flow for work and for sport. Like all things, Flow isn’t a silver bullet, and there are many times I still need to write concurrent code by hand. However, for many applications, using Flow would provide a path to running highly-concurrent code without having to juggle a lot of complexity and without having to pay the price to build those solutions from scratch. Hopefully you can find a place for Flow in your own projects!